A New Category of Conversation: In Conversation About AI with Eric Hudson

Eric Hudson is a facilitator and strategic advisor who helps schools make sense of what’s changing in education and of the role of technology in K-12 schools. What follows is a conversation between Eric and R.E.A.L.® founder Liza Garonzik. This conversation has been lightly edited for clarity.

Liza: Welcome, Eric, to Destination: Discussion. We’re so glad to have you here today. We’ve seen your name all over the independent school world, and we think of you as kind of the AI Scout for K-12 education. How did you get there? Tell us your story.

Eric: I’m an independent consultant now, and I’ve been on my own for about 18 months, but I started my career in the classroom. I was a middle and high school English teacher for about 12 years, then I spent a decade at Global Online Academy. When I was teaching, I got super interested in technology – specifically, technology as a tool that could empower students to become more independent learners and to do new things.

My interest in that fit really well with what GOA has been working on for a long time. While I was there, ChatGPT came about. In everything I do, I bring the lens of “how do we learn about this technology in a way that can help schools and teachers and kids do better work?”

Liza: I really appreciate your teacher-first approach, because I think it’s so easy to opine on what kids should and shouldn’t be doing. Empathizing with teachers as they experiment while setting clear boundaries is so important. And by the way, ChatGPT is not the only revolutionary technology kids and teachers will encounter in their lifetimes and careers!

At R.E.A.L.®, as you know, we’re obsessed with what we think of as the deeply human art of great discussion, especially in an AI world. One reason I reached out to you was that you’d written a blog post sharing your experiences conversing with an AI bot. That’s something we know our students are also experimenting with. What’s it like to converse with AI? What are its strengths, and where does it fall short?

Eric: It depends on the tool. One thing I have to say a lot in my work is that we tend to use “ChatGPT” the way we use words like “Kleenex” or “Xerox.” It’s really just one tool that’s come to represent a much broader field.

When you talk about talking to AI, there are all different things you can talk to. I was talking to advanced voice mode in the ChatGPT app. In general, where the technology is right now is that you interact with a human-sounding, podcast-quality voice that responds to your voice almost in real time. There’s a bit of a delay, and it doesn’t, at least for me, replicate exactly what a human conversation is, but it’s certainly way better than I thought it would be.

I’ve done sessions where I opened up advanced voice mode in a room full of teachers, and I explained to the bot that I’m in a room full of teachers, and I asked what questions it had for the group. The bot responds with questions that are aligned to the audience and relevant to the topic, and it answers teacher questions in a coherent, compelling way. It feels flat in the way that a lot of interactions with technology do, but it’s certainly better than a lot of other tools I’ve used.

Liza: Can you say more about the flatness?

Eric: I just wrote a post about Annie Murphy Paul’s book, The Extended Mind. One of the ways we learn is through nonverbal cues in our interactions with other people. When you’re interacting with a voice bot, you’re just getting the voice. You’re not getting a person, which is the first thing, but you’re also not getting expressions. You’re not getting physical reactions. You’re not able to assess – nor is it worth trying to assess – the emotions of the bot. You’re losing a lot of the nuance that goes around the kinds of interactions you would typically have with a human being, and that loss is huge.

Liza: That’s something we think a lot about – the fact that conversation unlocks the deepest human experiences. Conversation is the foundation of friendship, partnership, leadership, citizenship. There are neurobiological elements of conversation that feel impossible to imagine AI replicating perfectly … ever! What do you think conversations with various AI tools could look like in 5, 10, 15 years?

Eric: I can’t predict the future – that’s way above my pay grade. But I will say: we’re already seeing sort of realistic AI-generated avatars. You can speak with a very human-looking avatar with facial expressions who will react to what you say.

We’re also seeing tools in the accessibility space that bring multimodal communication to folks who are hearing-impaired or visually impaired. One thing we should expect is maybe not that this technology will replace the value of human interactions, but that it will create a new category of interaction, where we’ll be interacting with bots for certain things. It’s this new category of conversation that we need to prepare for.

Liza: That’s fascinating. The idea of transactional communication being outsourced or technologically enabled is intuitive. A new category of conversation, and even companionship, is a much bigger idea.

What do you see as the uniquely human skills that AI will never be able to replicate?

Eric: Have you seen the movie Her? It stars Joaquin Phoenix as a guy who lives in the near future and falls in love with a bot voiced by Scarlett Johansson. If you’re going to watch anything to try to understand what AI is going to do in 10 years, I think that movie is the best representation of where we are right now and where we’re headed in the short term. That movie is about vulnerability – and the thing that worries me about technology is how we’ll answer big questions like, what does it mean to be vulnerable with another person? What does it mean to be vulnerable in conversation? Bots eliminate that.

My biggest takeaway from interacting with these voice bots is that it feels very private in a way that is reassuring in certain ways and worrying in other ways. There’s just not a lot at stake for me when I’m talking to a bot. I can tell it anything, and it’s not going to judge me. I can tell it anything, and I’m not really worried about how it’s reacting. I think that’s great for certain use cases, and it’s a real concern for others.

Liza: Yes, absolutely, and I appreciate that nuance. Now let’s rewind and go back to your classroom teacher days – what do you think are some ways that AI can integrate into or support class discussion?

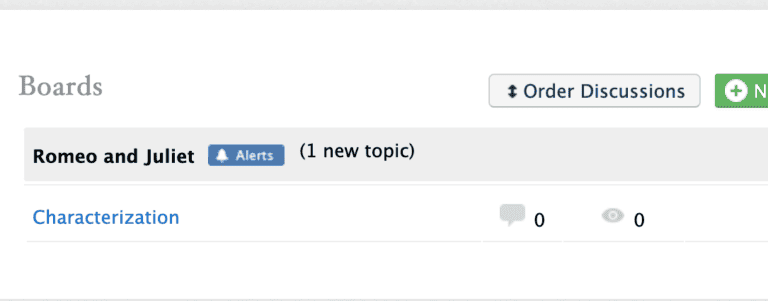

Eric: I’m already seeing English teachers that I’m working with try different things. One thing that predated ChatGPT is the idea of all these kinds of AI meeting tools that can record a discussion, track talk time, track filler words, and offer transcription and analysis of what’s going on. They can be your teaching assistant in tracking, monitoring, and getting quantitative data out of discussion.

I’m also seeing teachers who run discussion-based, Harkness-style classrooms use AI in a targeted way to support students who don’t bring a lot of discussion competencies to class. They’re asking students to use these bots to practice for live discussion, so they can enter a discussion feeling more empowered to contribute.

I used to run Harkness a lot in my English classroom. It’s a great pedagogy, but it’s also inequitable in a lot of ways, because it really does have a bias toward certain skill sets and personality traits. I think AI could be an assistive technology for students who maybe don’t naturally excel in that kind of format.

Liza: We couldn’t agree more. We’re very excited about the ways in which technology can make classroom discussions more equitable and more rigorous, and ultimately allow everyone to engage in whole-group discussion as a deeply human endeavor!

OK, two of our favorite questions as we wrap up. First: what is your greatest fear for K-12 education? And on the flip side, what’s your greatest hope?

Eric: Specifically related to AI’s impact on students, I’m worried about the vulnerability thing. I think there’s a lot of discourse around how learning requires friction, and AI eliminates friction. That’s very real and very valid. But learning also requires vulnerability, and I think that’s harder to measure and design for. I’m very concerned about the ease with which we can retreat into AI tools, rather than take certain higher-stakes risks.

My other fear is that K-12 education won’t change in response to the advent of AI. I’m concerned that people will try to white-knuckle their way through this, and the meaningful changes that technology has been pushing on education will continue not to happen. I think that’s really a disservice to kids.

I’m full of compassion for schools about this – it’s really hard and complex, but it’s also a call to action to change in certain meaningful ways.

Liza: And what’s your greatest hope?

Eric: My greatest hope is that we start listening to students in all of this. When I speak to kids in my work, their perspectives on this are as diverse and nuanced as any adult’s, and I don’t think we’re taking the student experience and perspective seriously enough when it comes to this technology.

When I talk to students about AI, they do not ask me about cheating. They do not ask me about school. They ask me about their futures. They ask me about the world they’re going to enter. They ask me about the fact that for the rest of their lives they’re going to have to be making decisions about generative artificial intelligence. That piece of it makes me hopeful that schools will engage students at that level and make choices for students that reflect the fact that this is something that is going to affect the rest of their lives and ours.

This is not like a SmartBoard. This is technology that is not just going to affect the school, but also society and the workplace and citizenship. I’m really hopeful that schools embrace the challenge and ambition of those really big questions.

Liza: I love that. We really appreciate your centering student voice as your greatest hope for K-12, because we certainly agree that we should be doing that more than we are, and that there’s infinite possibility when we do. So, thank you. Thank you so much for being here and for sharing all of these thoughts.

Eric: Thank you for having me.